Last active

January 5, 2022 12:44

-

-

Save IgorBaratta/ef1e36c64d7a616f5ddffd57fd0dfa9c to your computer and use it in GitHub Desktop.

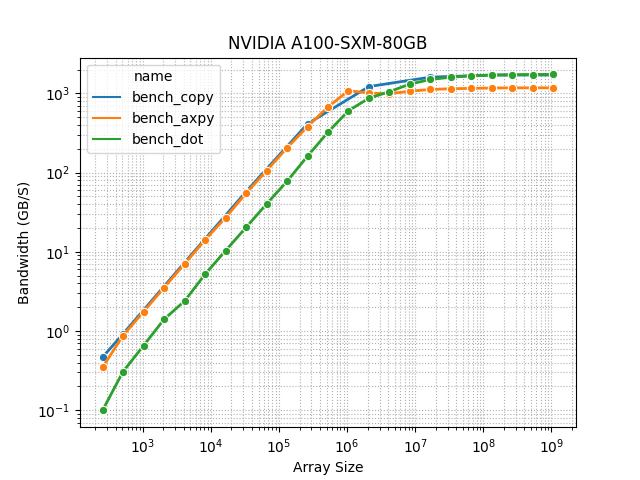

CUBLAS benchmark


```cmake
# CMakeLists.txt
cmake_minimum_required(VERSION 3.18)
set(PROJECT_NAME BMcublas)
project(${PROJECT_NAME})

include(CheckLanguage)
# check_language() takes a single language per call
check_language(CUDA)
enable_language(CUDA CXX)

set(CMAKE_BUILD_WITH_INSTALL_RPATH TRUE)

# Set C++ standard
set(CMAKE_CXX_STANDARD 17)
set(CMAKE_CXX_STANDARD_REQUIRED ON)

find_package(benchmark REQUIRED)
find_package(CUDA 11.0 REQUIRED)

add_executable(${PROJECT_NAME} main.cpp)
# SYSTEM has to come before the PUBLIC keyword
target_include_directories(${PROJECT_NAME} SYSTEM PUBLIC ${CUDA_INCLUDE_DIRS})
target_link_libraries(${PROJECT_NAME} ${CUDA_LIBRARIES} ${CUDA_CUBLAS_LIBRARIES} benchmark::benchmark)
```


```cpp
// main.cpp
#include "src.hpp"

#include <benchmark/benchmark.h>
#include <chrono>

double value = 1. / 3.;

using namespace std::chrono;

template <typename T>
static void BM_copy(benchmark::State& state) {
  std::size_t n = state.range(0);

  cublasHandle_t handle;
  cublasCreate(&handle);

  cudaEvent_t start, stop;
  cudaEventCreate(&start);
  cudaEventCreate(&stop);

  T* x = kernel::init_vector<T>(handle, n, value);
  T* y = kernel::init_vector<T>(handle, n, -value);

  for (auto _ : state) {
    // Time the kernel on the device with CUDA events
    cudaEventRecord(start);
    kernel::copy<T>(handle, n, x, y);
    cudaEventRecord(stop);
    cudaEventSynchronize(stop);

    float t = 0;  // elapsed time in milliseconds
    cudaEventElapsedTime(&t, start, stop);
    state.SetIterationTime(t / 1e3);  // manual time is given in seconds
  }

  // copy reads x and writes y
  state.SetBytesProcessed(sizeof(T) * 2 * n * state.iterations());
  state.SetLabel("cublas");

  cudaEventDestroy(start);
  cudaEventDestroy(stop);
  cublasDestroy(handle);
  // cudaFree takes the device pointer itself, not its address
  cudaFree(x);
  cudaFree(y);
}

template <typename T>
static void BM_axpy(benchmark::State& state) {
  std::size_t n = state.range(0);

  cublasHandle_t handle;
  cublasCreate(&handle);

  T* x = kernel::init_vector<T>(handle, n, value);
  T* y = kernel::init_vector<T>(handle, n, -value);
  T alpha = 1.;

  cudaEvent_t start, stop;
  cudaEventCreate(&start);
  cudaEventCreate(&stop);

  for (auto _ : state) {
    cudaEventRecord(start);
    kernel::axpy<T>(handle, n, alpha, x, y);
    cudaEventRecord(stop);
    cudaEventSynchronize(stop);

    float t = 0;  // elapsed time in milliseconds
    cudaEventElapsedTime(&t, start, stop);
    state.SetIterationTime(t / 1e3);
  }

  // axpy reads x and y and writes y, so three vectors are transferred
  state.SetBytesProcessed(sizeof(T) * 3 * n * state.iterations());
  state.SetLabel("cublas");

  cudaEventDestroy(start);
  cudaEventDestroy(stop);
  cublasDestroy(handle);
  cudaFree(x);
  cudaFree(y);
}

template <typename T>
static void BM_dot(benchmark::State& state) {
  std::size_t n = state.range(0);

  cublasHandle_t handle;
  cublasCreate(&handle);

  T* x = kernel::init_vector<T>(handle, n, value);
  T* y = kernel::init_vector<T>(handle, n, -value);

  cudaEvent_t start, stop;
  cudaEventCreate(&start);
  cudaEventCreate(&stop);

  for (auto _ : state) {
    cudaEventRecord(start);
    T result = kernel::dot<T>(handle, n, x, y);
    benchmark::DoNotOptimize(result);
    cudaEventRecord(stop);
    cudaEventSynchronize(stop);

    float t = 0;  // elapsed time in milliseconds
    cudaEventElapsedTime(&t, start, stop);
    state.SetIterationTime(t / 1e3);
  }

  // dot reads x and y
  state.SetBytesProcessed(sizeof(T) * 2 * n * state.iterations());
  state.SetLabel("cublas");

  cudaEventDestroy(start);
  cudaEventDestroy(stop);
  cublasDestroy(handle);
  cudaFree(x);
  cudaFree(y);
}

BENCHMARK_TEMPLATE(BM_copy, double)
    ->RangeMultiplier(2)
    ->Range(1 << 8, 1 << 29)
    ->UseManualTime();

BENCHMARK_TEMPLATE(BM_axpy, double)
    ->RangeMultiplier(2)
    ->Range(1 << 8, 1 << 29)
    ->UseManualTime();

BENCHMARK_TEMPLATE(BM_dot, double)
    ->RangeMultiplier(2)
    ->Range(1 << 8, 1 << 29)
    ->UseManualTime();

int main(int argc, char** argv) {
  kernel::print_device_info();
  ::benchmark::Initialize(&argc, argv);
  if (::benchmark::ReportUnrecognizedArguments(argc, argv))
    return 1;
  ::benchmark::RunSpecifiedBenchmarks();
}
```
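The benchmarks report throughput from a byte count passed to `SetBytesProcessed` and an iteration time measured with CUDA events. As a rough, host-only sketch of that arithmetic (no CUDA required), the helpers below turn an element count and an elapsed time in milliseconds into GB/s. The names `bytes_moved` and `effective_bandwidth_gbs` are hypothetical and not part of the gist, and the number of vectors touched per kernel (2 for copy and dot, 3 for axpy) assumes that every read and write goes to device memory.

```cpp
#include <cstddef>

// Hypothetical helper: bytes transferred by a kernel that reads or writes
// `vectors_touched` vectors of `n` elements, each `elem_size` bytes wide.
constexpr double bytes_moved(std::size_t n, std::size_t elem_size,
                             int vectors_touched) {
  return static_cast<double>(n) * static_cast<double>(elem_size) *
         vectors_touched;
}

// Hypothetical helper: effective bandwidth in GB/s (1 GB = 1e9 bytes).
// `elapsed_ms` is in milliseconds, the unit used by cudaEventElapsedTime.
constexpr double effective_bandwidth_gbs(double bytes, double elapsed_ms) {
  return bytes / (elapsed_ms / 1e3) / 1e9;
}
```

Comparing this figure with the peak bandwidth printed at startup gives a sense of how close each kernel gets to the hardware limit.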


```cpp
// src.hpp
#include <cuda_runtime.h>

#include <cstddef>
#include <iostream>
#include <stdexcept>
#include <string>
#include <type_traits>
#include <vector>

#include "cublas_v2.h"

// Always-false trait used to reject unsupported scalar types at compile time
template <class T>
struct unrecognized_type : std::false_type {};

namespace kernel {

inline void print_device_info() {
  int num_devices = 0;
  cudaGetDeviceCount(&num_devices);
  for (int i = 0; i < num_devices; i++) {
    cudaDeviceProp prop;
    cudaGetDeviceProperties(&prop, i);
    // memoryClockRate is in kHz and memoryBusWidth in bits; the factor 2
    // accounts for double-data-rate memory
    double bandwidth = 2.0 * prop.memoryClockRate * (prop.memoryBusWidth / 8) / 1.0e6;
    std::cout << "Device Number: " << i << std::endl;
    std::cout << "\tDevice name: " << prop.name << std::endl;
    std::cout << "\tShared memory available per block (kB): "
              << prop.sharedMemPerBlock / 1e3 << std::endl;
    std::cout << "\tGlobal memory available (GB): " << prop.totalGlobalMem / 1e9
              << std::endl;
    std::cout << "\tPeak Memory Bandwidth (GB/s): " << bandwidth << std::endl;
  }
  std::cout << std::endl;
}

// Throw if a CUDA runtime call failed
inline void assert_cuda(cudaError_t e) {
  if (e != cudaSuccess)
    throw std::runtime_error(std::string("CUDA runtime error: ") +
                             cudaGetErrorString(e));
}

template <typename T>
T* init_vector(cublasHandle_t handle, std::size_t n, T value) {
  // cudaMemset writes a single byte value to every byte, so it cannot fill a
  // vector with an arbitrary floating-point number. Fill on the host and copy.
  std::vector<T> host(n, value);
  T* x = nullptr;
  assert_cuda(cudaMalloc(&x, n * sizeof(T)));
  assert_cuda(cudaMemcpy(x, host.data(), n * sizeof(T), cudaMemcpyHostToDevice));
  return x;
}

template <typename T>
void copy(cublasHandle_t handle, std::size_t n, T* x, T* y) {
  if constexpr (std::is_same_v<T, double>)
    cublasDcopy(handle, static_cast<int>(n), x, 1, y, 1);
  else if constexpr (std::is_same_v<T, float>)
    cublasScopy(handle, static_cast<int>(n), x, 1, y, 1);
  else
    static_assert(unrecognized_type<T>::value, "copy: unsupported type");
}

template <typename T>
void axpy(cublasHandle_t handle, std::size_t n, T alpha, T* x, T* y) {
  if constexpr (std::is_same_v<T, double>)
    cublasDaxpy(handle, static_cast<int>(n), &alpha, x, 1, y, 1);
  else if constexpr (std::is_same_v<T, float>)
    cublasSaxpy(handle, static_cast<int>(n), &alpha, x, 1, y, 1);
  else
    static_assert(unrecognized_type<T>::value, "axpy: unsupported type");
}

template <typename T>
T dot(cublasHandle_t handle, std::size_t n, T* x, T* y) {
  T result = 0;
  if constexpr (std::is_same_v<T, double>)
    cublasDdot(handle, static_cast<int>(n), x, 1, y, 1, &result);
  else if constexpr (std::is_same_v<T, float>)
    cublasSdot(handle, static_cast<int>(n), x, 1, y, 1, &result);
  else
    static_assert(unrecognized_type<T>::value, "dot: unsupported type");
  return result;
}

}  // namespace kernel
```
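`print_device_info` estimates the peak memory bandwidth from two `cudaDeviceProp` fields. As a minimal sketch of that formula in isolation, assuming `memoryClockRate` is reported in kHz and `memoryBusWidth` in bits, and that the factor 2 stands for double-data-rate memory, the hypothetical function `peak_bandwidth_gbs` below reproduces the calculation on the host without any CUDA dependency.

```cpp
// Hypothetical stand-alone version of the estimate in print_device_info.
// memory_clock_khz: memory clock in kHz; bus_width_bits: bus width in bits.
constexpr double peak_bandwidth_gbs(int memory_clock_khz, int bus_width_bits) {
  // 2 transfers per clock * clock [kHz] * bus width [bytes] gives kB/s;
  // dividing by 1e6 converts kB/s to GB/s.
  return 2.0 * memory_clock_khz * (bus_width_bits / 8) / 1.0e6;
}
```

With illustrative inputs of an 877000 kHz memory clock and a 4096-bit bus, `peak_bandwidth_gbs(877000, 4096)` evaluates to about 898 GB/s. These numbers are an example, not a measurement from the gist.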


Make:

```sh
mkdir build
cd build
cmake ..
make
```

Run: